There’s a Secret Behind the Smartest AI Systems… and It’s Called RAG

You’ve seen ChatGPT write code.

You’ve seen copilots drafting contracts, HR policies, even entire project plans.

You think it’s just a smart model?

Think again. 💡

Behind the scenes of today’s most powerful AI systems lies a hidden strategy — a framework quietly transforming how enterprises search, think, and generate answers.

Its name?

🔐 Retrieval-Augmented Generation (RAG).

⸻

🎭 Not Just a Model — A Memory Machine

Here’s the dirty little secret about large language models (LLMs):

They don’t actually “know” your data.

No matter how powerful GPT or Gemini or Claude becomes — they’re trained on static datasets. They don’t know your invoices. Your policies. Your emails. Your SharePoint folders.

And that’s where RAG flips the game.

With RAG, the model doesn’t need to remember everything.

Instead, it retrieves just what it needs — in real time.

It’s like giving the model a live research assistant.

But this assistant doesn’t search Google.

It dives into your own vectorized, indexed knowledge base, curated and ranked by relevance, and whispers the right context to the model at the perfect moment.

⸻

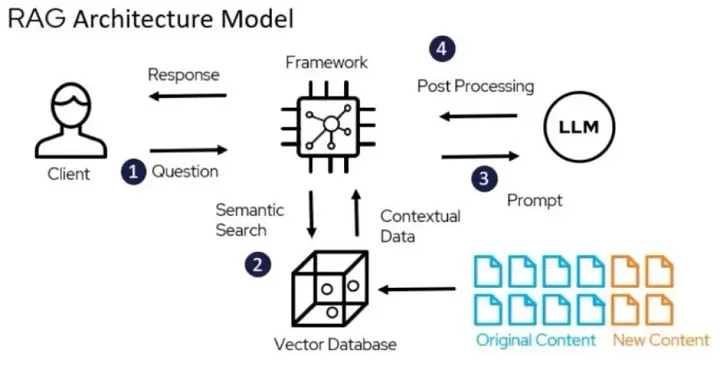

🧠 How It Actually Works (In Secret)

Let’s break the magic into 3 silent steps:

1️⃣ Retrieval:

The user asks a question. Before the model answers, a vector search engine (like Azure AI Search) searches internal documents using embeddings, numeric vectors that capture the meaning of text. It brings back the most relevant pieces: snippets, paragraphs, even images.

2️⃣ Augmentation:

These retrieved passages are inserted into the prompt (often the system prompt) as context. Now the model is not just guessing: it’s informed.

3️⃣ Generation:

The LLM generates a response based on both its pretraining and the freshly injected knowledge.
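
The three steps above can be sketched in a few lines of Python. This is a minimal illustration under loud assumptions, not a production design: the documents in `DOCS` are made up, `embed` is a toy bag-of-words counter standing in for a real embedding model, and `generate` is a placeholder where a call to a hosted LLM would go.

```python
import math
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would index enterprise documents.
DOCS = {
    "leave-policy": "Employees receive 21 days of annual leave per year.",
    "expense-policy": "Expense reports must be submitted within 30 days.",
    "vpn-guide": "Connect to the VPN before opening internal dashboards.",
}

def embed(text):
    """Toy embedding: word counts (an assumption; real systems use a learned embedding model)."""
    cleaned = "".join(c.lower() if c.isalnum() else " " for c in text)
    return Counter(cleaned.split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, k=2):
    """Step 1: rank documents by similarity to the question and keep the top k."""
    q = embed(question)
    ranked = sorted(DOCS.items(), key=lambda item: cosine(q, embed(item[1])), reverse=True)
    return ranked[:k]

def augment(question, hits):
    """Step 2: place the retrieved passages into the prompt as context."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"

def generate(prompt):
    """Step 3: placeholder for the LLM call, so the sketch runs offline."""
    return f"(an LLM would answer here, given a {len(prompt)}-character prompt)"

question = "How many days of annual leave do employees get?"
hits = retrieve(question)
prompt = augment(question, hits)
print(prompt)
print(generate(prompt))
```

In a real deployment, the retrieval step would typically query a managed vector index and the last step would call a model endpoint. The shape of the pipeline stays the same.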

Simple?

Yes.

But the impact is revolutionary.

⸻

🎯 Why Are Enterprises Secretly Adopting RAG?

Because models answering from memory alone can hallucinate.

RAG reduces that risk by grounding answers in retrieved sources.

Because retraining LLMs is costly.

RAG avoids it.

Because your data is growing.

RAG evolves without retraining — just update your index.

📈 The result?

• More accurate answers

• Answers that reflect your latest data

• Private knowledge control

• Traceable sources (with citations, if needed)
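
To make "just update your index" and "traceable sources" concrete, here is a small sketch. Everything in it is hypothetical: the `index` dictionary stands in for a real search index, the policy texts are invented, and the word-overlap scoring is a toy substitute for vector search. The point is only that swapping a document changes what gets retrieved, while the model itself is never touched.

```python
# Hypothetical in-memory index: document id -> text (stand-in for a real search index).
index = {"policy-v1": "Remote work is allowed two days per week."}

def search(query, k=1):
    """Rank documents by the number of words shared with the query (toy relevance score)."""
    terms = set(query.lower().split())
    scored = [
        (len(terms & set(text.lower().rstrip(".").split())), doc_id, text)
        for doc_id, text in index.items()
    ]
    scored.sort(reverse=True)
    return [(doc_id, text) for score, doc_id, text in scored[:k] if score > 0]

def answer_with_sources(query):
    """Return the retrieved context together with the ids it came from, for citation."""
    hits = search(query)
    return {"context": [text for _, text in hits], "sources": [doc_id for doc_id, _ in hits]}

before = answer_with_sources("remote work days")

# The policy changes: update the index. No model is retrained.
index["policy-v2"] = "Remote work is allowed three days per week starting in January."
del index["policy-v1"]

after = answer_with_sources("remote work days")
print(before["sources"], after["sources"])
```

Returning the document ids alongside the context is what lets an assistant show where each answer came from.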

⸻

🌍 RAG in the Wild — From KSA to Global Giants

In Saudi Arabia, regulatory compliance is non-negotiable.

Imagine a RAG-powered assistant that can quote NCA (National Cybersecurity Authority) regulations, match internal policies to external frameworks, and give contextual recommendations, all grounded in your own SharePoint, PDFs, and emails.

Across healthcare, finance, education, and government, RAG is being deployed to:

• Replace outdated intranet search systems 🔍

• Power legal copilots with far fewer hallucinations ⚖️

• Build knowledge advisors for frontline workers 👷

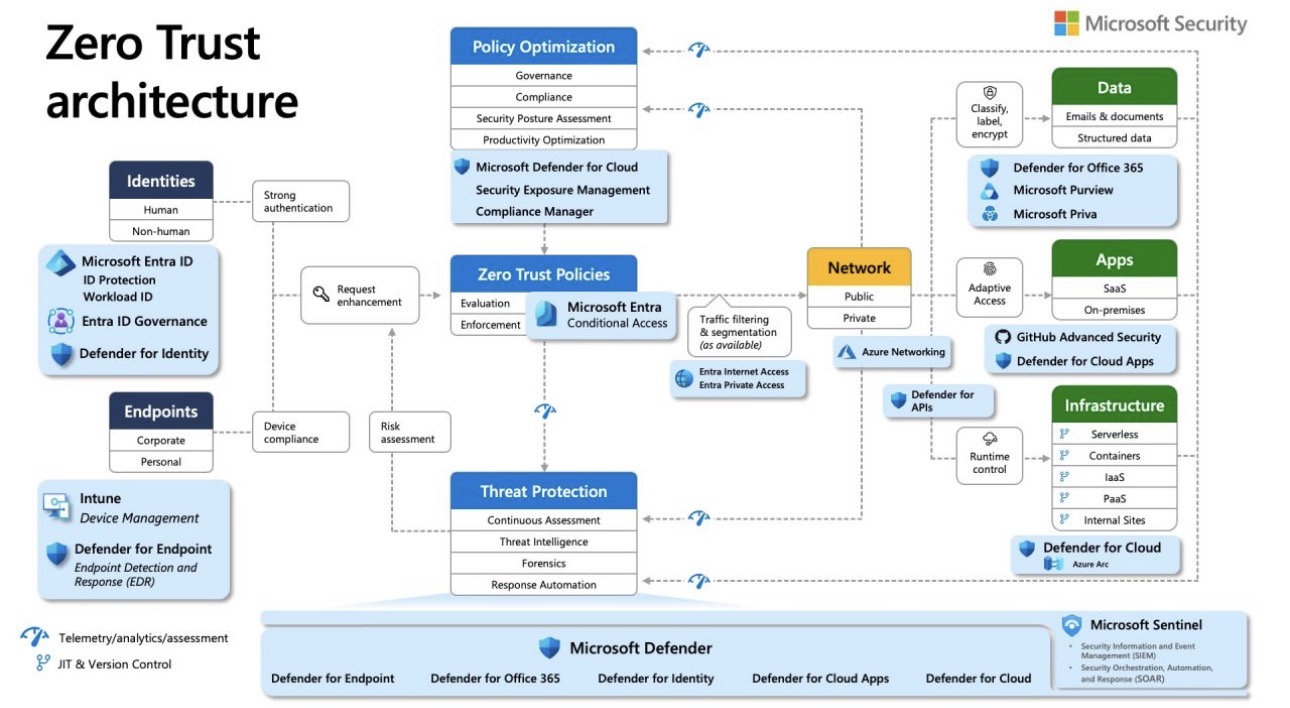

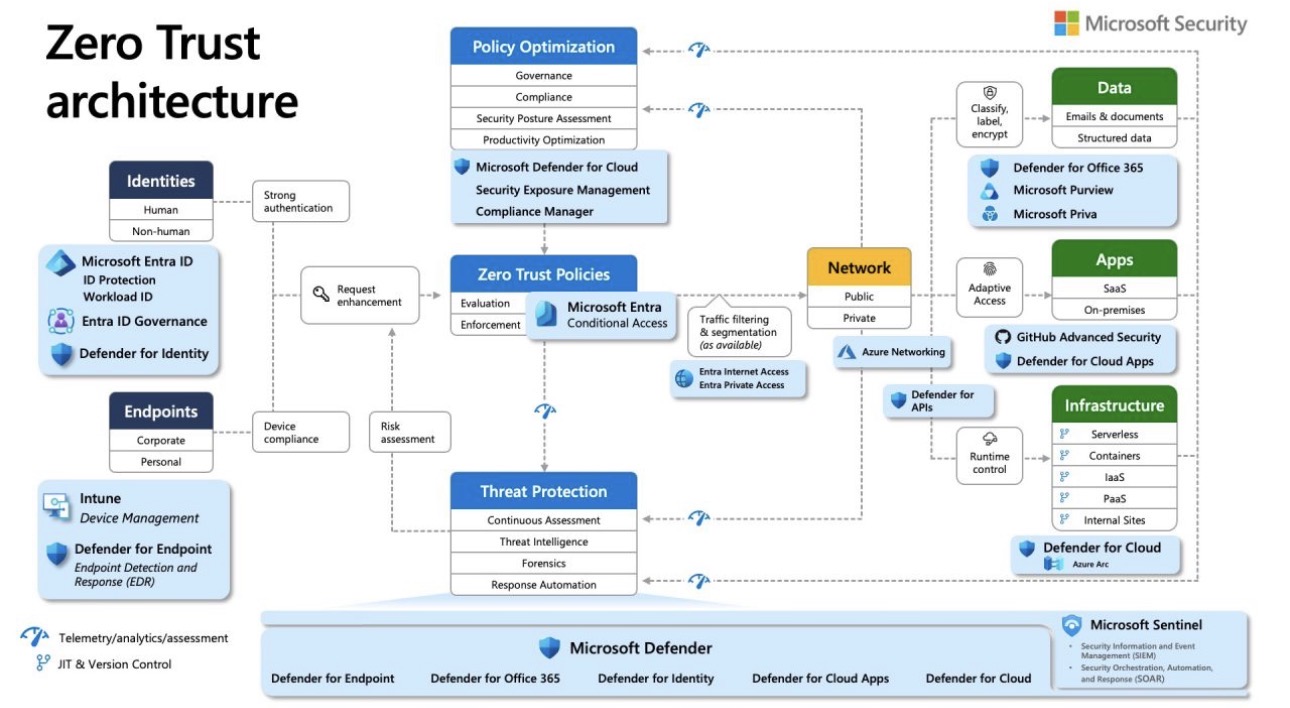

• Enforce security by only retrieving approved data 🔐
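
One way to read "only retrieving approved data" is as a permission filter applied before ranking, so restricted passages never reach the prompt. The sketch below assumes a hypothetical `Chunk` record carrying an `allowed_groups` field, with invented documents and group names. Real systems would enforce this inside the search service rather than in application code.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: frozenset  # hypothetical access-control metadata stored with each chunk

CHUNKS = [
    Chunk("hr-salaries", "Salary bands are reviewed every April.", frozenset({"hr"})),
    Chunk("travel-policy", "Travel bookings go through the internal portal.", frozenset({"hr", "staff"})),
]

def retrieve_for_user(query, user_groups, k=3):
    """Filter by permission first, then rank what remains by word overlap (toy scoring)."""
    visible = [c for c in CHUNKS if c.allowed_groups & user_groups]
    terms = set(query.lower().split())
    scored = [(len(terms & set(c.text.lower().rstrip(".").split())), c) for c in visible]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [c for score, c in ranked[:k] if score > 0]

hr_view = [c.doc_id for c in retrieve_for_user("salary bands", {"hr"})]
staff_view = [c.doc_id for c in retrieve_for_user("salary bands", {"staff"})]
print(hr_view, staff_view)
```

Filtering before ranking means a user outside the HR group gets no salary passage at all, instead of relying on the model to withhold it.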

⸻

💡 The Architecture Behind the Curtain

Wanna see the components?

• 💬 Azure OpenAI Service → The LLM brain

• 🔍 Azure AI Search → The retriever with semantic ranking

• 🧠 Azure Blob Storage or Cosmos DB → The knowledge vault where source documents live (Azure Cognitive Search is the former name of Azure AI Search)

• 🛠️ LangChain or Semantic Kernel → The orchestration logic

• 🧾 Citations + Metadata → For traceable, secure outputs

• 🎯 Optional: Microsoft Purview (formerly Azure Purview) for data classification and compliance

All of it is orchestrated through APIs, prompts, and chains, with zero model retraining.
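
The component list above suggests a design where the retriever and the model sit behind small interfaces and the orchestration code only wires them together. Here is a hedged sketch of that idea. The `Retriever` and `ChatModel` protocols, `KeywordRetriever`, and `EchoModel` are all hypothetical stand-ins invented for illustration. They are not the APIs of Azure AI Search, Azure OpenAI, LangChain, or Semantic Kernel.

```python
from typing import Protocol

class Retriever(Protocol):
    def search(self, query: str, k: int) -> list[str]: ...

class ChatModel(Protocol):
    def complete(self, prompt: str) -> str: ...

class KeywordRetriever:
    """Stand-in for a search service client (hypothetical)."""
    def __init__(self, passages):
        self.passages = passages

    def search(self, query, k):
        terms = set(query.lower().split())
        hits = [p for p in self.passages if terms & set(p.lower().rstrip(".").split())]
        return hits[:k]

class EchoModel:
    """Stand-in for a hosted LLM (hypothetical); it simply echoes the prompt it received."""
    def complete(self, prompt):
        return "PROMPT RECEIVED:\n" + prompt

def answer(question: str, retriever: Retriever, model: ChatModel, k: int = 2) -> str:
    """Orchestration logic: retrieve, build the prompt, call the model."""
    passages = retriever.search(question, k)
    prompt = "Context:\n" + "\n".join(passages) + f"\n\nQuestion: {question}"
    return model.complete(prompt)

retriever = KeywordRetriever([
    "Incident reports are due within 24 hours.",
    "The cafeteria opens at 8 am.",
])
result = answer("incident reports", retriever, EchoModel())
print(result)
```

Because `answer` depends only on the two interfaces, either side can be swapped for a real service client without changing the orchestration code.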

⸻

🧨 What They Don’t Tell You…

This isn’t just a tech solution.

It’s a strategy.

A RAG-enabled LLM becomes your company’s smartest employee: briefed in real time on your ever-changing data, always up to date, always on-message.

But here’s the kicker…

RAG isn’t the future.

It’s already here.

And those who adopt it early?

They’re the ones building AI systems that don’t just sound smart — they are smart.

⸻

📣 Want to Know If Your Business Is RAG-Ready?

• Got unstructured documents? PDFs, Word files, SharePoint, Teams chats?

• Need accurate answers without retraining a model?

• Want to reduce risk of hallucination in compliance-heavy sectors?

• Looking for an AI assistant that’s actually grounded in your data?

Then you’re not just ready.

You’re overdue.

Author: Moamen Hany
